Table of Contents:

- What is Spark Performance Tuning?

- The Challenge of Apache Spark Performance Tuning

- Choosing the Partition and Executor Size

- Real-Time Cost Optimization Solution for Spark Performance Tuning

- Case Study: How Extole Saved 30%

Apache Spark is an open-source, distributed processing framework designed to run big data workloads much faster than Hadoop MapReduce and with fewer resources. Spark leverages in-memory and local disk caching, along with optimized execution plans, to perform computation quickly against data of any size. To get the most out of their Spark environments, Spark users need to learn and master Spark tuning.

However, Apache Spark can be very complex, and it can present a wide range of problems—cost overruns, underutilized resources, poor application performance—if not properly optimized.

That’s why Spark performance tuning is essential: it ensures that you are not overpaying for unused cloud resources.

What is Spark Performance Tuning?

Spark performance tuning is the process of making rapid and timely changes to Spark configurations so that all processes and resources are optimized and function smoothly. To ensure peak performance and avoid costly resource bottlenecks, Spark tuning involves careful calibration of memory allocations, core utilization, and instance configurations. This Spark optimization process enables users to achieve SLA-level Spark performance while mitigating resource bottlenecks and preventing performance issues.

The Challenge of Apache Spark Performance Tuning

The State of FinOps 2024 Report found that reducing waste or unused resources was the highest priority among respondents. With increased Spark usage, managing the price and performance of applications can become a major challenge.

Cloud platforms such as Amazon EMR or Amazon EKS offer a variety of infrastructure-level optimizations, but there is even more that can be done within the Spark applications themselves to address the issue of wasted capacity. Without the proper direction, any attempts at Spark monitoring could quickly prove futile and costly in both time and resources spent.

Even expert developers encounter challenges in optimizing applications because it’s not always clear what resources an application will use. Spark developers have a lot of things to worry about when processing huge amounts of data: how to efficiently source the data, perform extract, transform, and load (ETL) operations, and validate datasets at a very large scale. But while they’re making sure that their code is bug free and maintained in all the necessary environments, they often overlook tasks such as tuning Spark application parameters for optimal performance.

When done properly, tuning Spark applications lowers resource costs while maintaining SLAs for critical processes, which is a concern for both on-premises and cloud environments. For on-premises Hadoop environments, clusters are typically shared by multiple apps and their developers. If one developer’s applications start to consume all the available resources, it slows down everyone’s applications and increases the risk of task failures.

In the cloud, optimizing resource usage through tuning translates directly to reduced bills at the end of the month. However, cloud cost optimization through manual Spark performance tuning can only go so far: the constant fluctuations in compute and memory requirements are impossible to continuously control without automation, and can cause the costs of your Spark applications on Amazon EMR or EKS to skyrocket.

Here are some Apache Spark optimization techniques to help eliminate overprovisioning and overspending on application costs:

- Sizing Spark executors and partitions. We’ll look at how sizing for executors and partitions is interrelated and the implications of incorrect (or nonoptimal) choices. We’ll also provide a heuristic that we’ve found to be effective for our own Spark workloads.

- Using Pepperdata Capacity Optimizer. Capacity Optimizer is the easiest and most practical Spark optimization solution for organizations with a large number of Spark applications. It is real-time, autonomous cost optimization for Spark workloads on Amazon EMR and EKS, ensuring that resources are utilized to the maximum extent possible.

Before getting into the details, let’s review a few Spark terms and definitions:

Stage

A Spark application is divided into stages. A stage is a step in the physical execution plan. It ends when a shuffle is required (a ShuffleMapStage) or when the stage writes its result and terminates as expected (a ResultStage).

Task

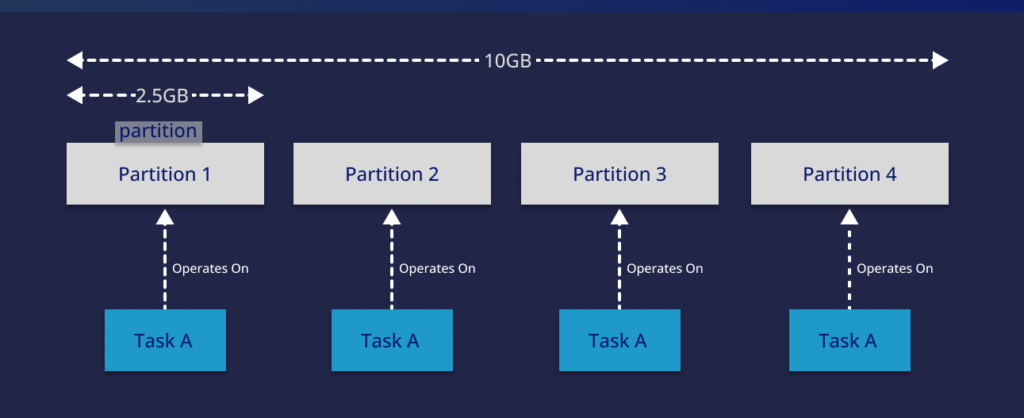

Each stage is divided into tasks that are executed in parallel—one task per partition. Tasks are executed by the executors.

Executor

Executors are the workers that execute tasks. Resources (memory and CPU cores) are allocated to executors by the developer before runtime.

Partition

Partitions are logical chunks of data—specifically, chunks of a Resilient Distributed Dataset (RDD)—which can be configured by the developer before runtime. The number of partitions in an RDD determines the number of tasks that will be executed in a stage. For each partition, a task is given to an executor to execute.
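To make the relationship between partitions, tasks, and executors concrete, here is a minimal arithmetic sketch. The function name and the sample numbers are hypothetical, and it assumes each executor core runs one task at a time (Spark's default of one CPU per task).

```python
import math


def tasks_and_waves(num_partitions: int, num_executors: int, cores_per_executor: int):
    """Return (tasks in the stage, scheduling waves needed to run them)."""
    # One task per partition, as described above.
    num_tasks = num_partitions
    # Assumption: one task per executor core at a time.
    task_slots = num_executors * cores_per_executor
    # A "wave" is one round in which every slot runs at most one task.
    waves = math.ceil(num_tasks / task_slots)
    return num_tasks, waves


if __name__ == "__main__":
    # Hypothetical stage: 200 partitions on 10 executors with 4 cores each.
    print(tasks_and_waves(200, 10, 4))  # (200, 5)
```

A stage whose partition count is just above a multiple of the slot count needs an extra, mostly idle wave, which is one reason partition counts are worth choosing deliberately.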

Because a Spark application can consist of many different types of stages, the configuration that’s optimal for one stage might be inappropriate for another stage. Therefore, Spark memory optimization techniques for Spark applications have to be performed stage by stage.

Executor and Partition Sizing

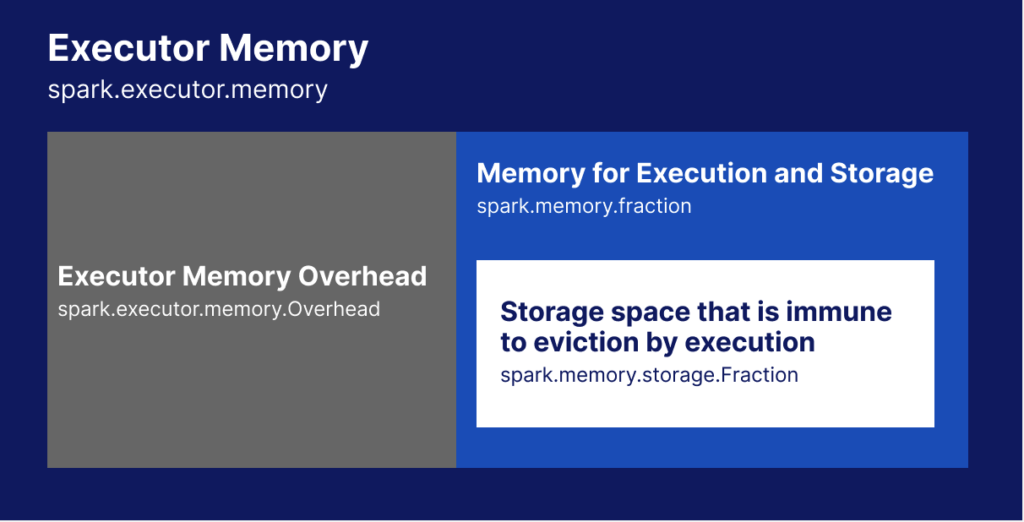

Executor and partition sizing are two of the most important factors that a developer has control over with Spark tuning. To understand how they are related to each other, we first need to understand how Spark executors use memory. Figure 2 shows the different regions of Spark executor memory.

There is a single parameter that controls the portion of executor memory reserved for both execution and storage: spark.memory.fraction. So if we want to store our RDDs in memory, our executors need to be large enough to handle both storage and execution. Otherwise, we run the risk of errors (such as task failures due to lack of resources) or long application runtimes.
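As a rough illustration of how spark.memory.fraction carves up an executor's heap, the sketch below estimates the size of each region. It assumes the defaults described in Spark's tuning guide (300 MiB reserved, spark.memory.fraction of 0.6, spark.memory.storageFraction of 0.5); check the values for your Spark version. The function name is hypothetical, and the storage/execution split is only a soft boundary, since each side can borrow from the other at runtime.

```python
RESERVED_MIB = 300  # set aside by Spark before spark.memory.fraction is applied


def executor_memory_regions(heap_mib, memory_fraction=0.6, storage_fraction=0.5):
    """Estimate executor memory regions (in MiB) for a given JVM heap size."""
    usable = heap_mib - RESERVED_MIB
    # Shared pool for execution and storage, sized by spark.memory.fraction.
    unified = usable * memory_fraction
    # Portion of the pool where cached blocks are protected from eviction.
    storage = unified * storage_fraction
    return {
        "unified_mib": unified,
        "storage_mib": storage,
        "execution_mib": unified - storage,
        "user_mib": usable - unified,  # user data structures, Spark metadata
    }


if __name__ == "__main__":
    # Hypothetical executor sizes, in MiB of heap.
    for heap in (2048, 4096, 8192):
        regions = executor_memory_regions(heap)
        print(heap, {name: round(size) for name, size in regions.items()})
```

Under these assumptions, only a little over half of the configured heap is available for execution and storage combined, which is why an executor that looks large enough on paper can still run short of memory.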

On the other hand, the larger the executor size, the fewer executors that can run simultaneously in the cluster. That is, large executor sizes frequently cause suboptimal execution speed due to a lack of task parallelism.
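The trade-off between executor size and parallelism can be sketched with simple arithmetic. The helper names and cluster numbers below are hypothetical, and the model is deliberately simplified: it ignores per-executor memory overhead and any memory reserved for the operating system or cluster manager.

```python
def executors_per_node(node_memory_gib, node_cores, executor_memory_gib, executor_cores):
    """How many executors fit on one node, limited by memory and by cores."""
    by_memory = node_memory_gib // executor_memory_gib
    by_cores = node_cores // executor_cores
    return min(by_memory, by_cores)


def cluster_task_slots(num_nodes, node_memory_gib, node_cores,
                       executor_memory_gib, executor_cores):
    """Total tasks that can run at once across the cluster."""
    per_node = executors_per_node(node_memory_gib, node_cores,
                                  executor_memory_gib, executor_cores)
    return num_nodes * per_node * executor_cores


if __name__ == "__main__":
    # Hypothetical cluster: 10 nodes, each with 64 GiB of memory and 16 cores.
    print(cluster_task_slots(10, 64, 16, executor_memory_gib=8, executor_cores=2))   # 160
    print(cluster_task_slots(10, 64, 16, executor_memory_gib=32, executor_cores=2))  # 40
```

In this example, quadrupling executor memory while keeping two cores per executor cuts the number of concurrent tasks from 160 to 40, leaving most of the cluster's cores idle.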

There’s also the problem of choosing the number of CPU cores for each executor, but the choices are limited. Typically, 1 to 4 cores per executor provides a good baseline to help achieve full write throughput.

Choosing the Partition and Executor Size

One of the best Spark memory optimization techniques when dealing with partitions and executors is to first choose the number of partitions, then pick an executor size to meet the memory requirements.

Choosing the Number of Partitions

Partitions control how many tasks will execute on the dataset for a particular stage. Under optimal conditions with little or no friction (network latency, host issues, and the overhead associated with task scheduling and distribution), assigning the number of partitions to be the number of available cores in the cluster would be ideal. In this case, all the tasks would start at the same time, and they would all finish at the same time, in a single step.

However, real environments are not optimal. When Spark tuning, it is important to consider that:

- Executors don’t finish the tasks at the same speed. Straggler tasks are tasks that take significantly longer than the other application tasks to execute. To combat this, configure the number of partitions to be more than the number of available cores so that the fast hosts work on more tasks than the slow hosts.

- There is overhead associated with sending and scheduling each task. If we run too many tasks, the increased overhead takes a larger percentage of overall resources, and the result is a significant increase in app runtimes.
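Both effects can be seen in a toy simulation. This is a sketch under simplifying assumptions, not a model of Spark's actual scheduler: tasks are handed greedily to whichever core frees up first, every task carries a fixed overhead, and one of four cores runs at half speed. All names and numbers are hypothetical.

```python
import heapq


def stage_runtime(num_partitions, core_speeds, total_work=12.0, per_task_overhead=0.05):
    """Simulate one stage with greedy scheduling and return its finish time."""
    work_per_task = total_work / num_partitions
    # Min-heap of (time the core becomes free, core index).
    free_at = [(0.0, core) for core in range(len(core_speeds))]
    heapq.heapify(free_at)
    finish = 0.0
    for _ in range(num_partitions):
        start, core = heapq.heappop(free_at)
        # Slower cores take longer on the same work; overhead is paid per task.
        end = start + work_per_task / core_speeds[core] + per_task_overhead
        finish = max(finish, end)
        heapq.heappush(free_at, (end, core))
    return finish


if __name__ == "__main__":
    speeds = [1.0, 1.0, 1.0, 0.5]  # the last core is a straggler
    for partitions in (4, 12, 1200):
        print(partitions, round(stage_runtime(partitions, speeds), 2))
```

With one partition per core, the stage waits on the slow core. With three partitions per core, the fast cores absorb more of the work and the stage finishes sooner. With 1,200 tiny partitions, the per-task overhead dominates and the stage is slower than either.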

When using Spark optimization techniques, remember this rule of thumb: For large datasets—larger than the available memory on a single host in the cluster—always set the number of partitions to be 2 or 3 times the number of available cores in the cluster.

However, if the number of cores in the cluster is small and the dataset is huge, choosing a number of partitions that results in partition sizes equal to a certain disk block size, such as 128 MB, has some advantages with regard to I/O speed.
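The two rules above can be combined into one small helper. This is one possible reading of the heuristic, not a definitive formula: it takes the larger of "a multiple of the available cores" and "enough partitions to keep each one near the block size." The function name, the default multiplier of 3, and the sample inputs are assumptions made for illustration.

```python
import math

BLOCK_SIZE_MIB = 128  # target partition size when cores are scarce


def suggest_num_partitions(dataset_mib, total_cores, multiplier=3):
    """Suggest a partition count for a large dataset."""
    # Rule of thumb: 2 or 3 times the number of available cores.
    by_cores = multiplier * total_cores
    # Small cluster, huge dataset: keep partitions close to one block each.
    by_block_size = math.ceil(dataset_mib / BLOCK_SIZE_MIB)
    return max(by_cores, by_block_size)


if __name__ == "__main__":
    # 100 GiB on a 400-core cluster: the core-based rule wins.
    print(suggest_num_partitions(100 * 1024, total_cores=400))   # 1200
    # 1 TiB on a 16-core cluster: the block-size rule wins.
    print(suggest_num_partitions(1024 * 1024, total_cores=16))   # 8192
```

The result is the kind of value that would typically be passed to a repartition call or set through spark.default.parallelism or spark.sql.shuffle.partitions, then adjusted based on observed runtimes.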

Choosing an Executor Size

As we’ve discussed, Spark tuning also involves giving executors enough memory to handle both storage and execution. So when choosing executor size, consider the partition size, the entire dataset size, and whether data will be cached in memory.

To ensure that tasks execute quickly, avoiding disk spills is important. Disk spills occur when executors have insufficient memory. This forces Spark to “spill” some of the data to disk during runtime.

In our experiments, we’ve found that a good choice for executor size is the smallest size that does not cause disk spills. Picking too large a value might mean too few executors would be used. Finding the right size that avoids disk spills requires some experimentation.
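The experimentation described above amounts to a simple search: run the application at several executor sizes, record how much data spilled, and keep the smallest size with no spill. The sketch below assumes the spill totals have already been collected by hand (for example, from the spill metrics shown for each stage in the Spark UI). The function name and the measurements are hypothetical.

```python
def smallest_size_without_spill(spill_bytes_by_size_gib):
    """Return the smallest executor size (GiB) whose trial run spilled nothing.

    spill_bytes_by_size_gib maps executor memory in GiB to the total bytes
    spilled to disk in a trial run at that size. Returns None if every
    trial spilled, meaning larger sizes still need to be tested.
    """
    no_spill = [size for size, spilled in spill_bytes_by_size_gib.items() if spilled == 0]
    return min(no_spill) if no_spill else None


if __name__ == "__main__":
    # Hypothetical trial results: executor GiB -> bytes spilled to disk.
    trials = {2: 5_000_000_000, 4: 750_000_000, 6: 0, 8: 0}
    print(smallest_size_without_spill(trials))  # 6
```

Here 6 GiB is preferred over 8 GiB even though neither spills, because the smaller size leaves room for more executors to run at once.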

Why Dynamic Allocation Doesn’t Solve Overallocation in Spark

Dynamic Allocation is a feature of Spark that enables an application to automatically request more executors when tasks are waiting to be scheduled and to remove idle executors as workload requirements vary. Because Dynamic Allocation ensures that resources are only provisioned when required, it can help prevent resource overprovisioning. Dynamic Allocation can also help accelerate application execution times, especially during peak workloads.
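For reference, Dynamic Allocation is controlled through a handful of spark.dynamicAllocation.* properties. The sketch below builds such a configuration and renders it as spark-submit arguments. The property names are standard Spark settings, but the helper functions and the chosen values are hypothetical, and the shuffle-tracking setting assumes Spark 3.0 or later without an external shuffle service.

```python
def dynamic_allocation_conf(min_executors, max_executors, idle_timeout="60s"):
    """Build a Spark configuration dict that enables Dynamic Allocation."""
    return {
        "spark.dynamicAllocation.enabled": "true",
        # Bounds on how far the executor count may scale down and up.
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
        # How long an executor may sit idle before it is removed.
        "spark.dynamicAllocation.executorIdleTimeout": idle_timeout,
        # Assumption: no external shuffle service, so track shuffle files instead.
        "spark.dynamicAllocation.shuffleTracking.enabled": "true",
    }


def to_spark_submit_args(conf):
    """Render a configuration dict as a list of spark-submit arguments."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    print(" ".join(to_spark_submit_args(dynamic_allocation_conf(2, 50))))
```

Note that these settings only govern how many executors exist at a given moment. They do not change how much memory or CPU each executor requests, or how much of that request its tasks actually use.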

However, Dynamic Allocation does have some downsides. Along with the additional complexity that Dynamic Allocation introduces, it can also result in underutilization during periods of low workload if the cluster does not release resources promptly when no longer needed.

Dynamic Allocation also doesn’t address a fundamental problem that Spark tasks can waste the resources requested by the scheduler. In Pepperdata’s experience, these wasted resources can amount to as much as half of the provisioned resources, even when Dynamic Allocation is enabled.

Spark on Kubernetes: Performance Optimization a Must

It’s hard to discuss Spark without including Kubernetes in the conversation. Many users run Spark with Kubernetes, with the latter providing automated and seamless application deployment, management, and scaling. As Kubernetes is an open-source framework, users deploying Spark on Kubernetes benefit from its automation and easy administration without the added costs.

That said, an unoptimized Spark-Kubernetes configuration can result in poor allocation and utilization of resources. When users deploy Spark on Kubernetes without any Spark performance tuning, this can lead to poor performance and runaway costs.

It’s crucial for developers to optimize both Spark and Kubernetes to maximize the benefits of both tools, enhance performance, and achieve desired outputs while keeping costs manageable. See the quick guide to getting started with Spark on Kubernetes.

Pepperdata Capacity Optimizer: The Only Real-Time Cost Optimization Solution for Spark Performance Tuning

For clients ranging from global giants to tech startups, Pepperdata Capacity Optimizer is the only Spark performance tuning solution that continuously and autonomously reduces instance hours and costs by improving resource utilization in real time. By informing the scheduler of exact resource usage and ensuring that every instance is fully utilized before a new instance is added, Pepperdata delivers 30 to 47 percent immediate cost savings with no manual application tuning and offers a guaranteed ROI between 100 and 662 percent.

Traditional infrastructure optimizations don’t eliminate the problem of application waste due to unused capacity at the Spark task and executor level. By autonomously and continuously reclaiming waste from Spark applications, Pepperdata Capacity Optimizer automatically closes this gap and empowers you to:

- Reduce instance hours and costs

- Optimize Spark clusters for efficiency

- Redirect IT resources to higher value-added tasks

Capacity Optimizer increases the virtual capacity of every node in real time to help reduce your hardware usage—ultimately decreasing your instance hours consumption and creating automatic, continuous cost savings.

Through patented algorithms, Pepperdata’s Continuous Intelligent Tuning also completely eliminates the need for doing manual tuning, applying recommendations, or making application code changes in order to optimize the price/performance of your Spark clusters.

Case Study: How Extole Saved 30% on Its Spark Application Costs Running on Amazon EMR

Before bringing in Pepperdata, Extole had budgeted between 10 and 25 percent of Apache Spark machine resources as idle overhead for ad hoc reports.

Within five days of implementing Pepperdata Capacity Optimizer, the Extole engineering team was able to run up to 27 percent more Spark jobs without increased cloud costs and without the need for ongoing manual tuning.

Check out the case study for more information on how Capacity Optimizer delivered greater throughput without increasing costs.

Give us 6 Hours—We’ll Save You 30%

Pepperdata typically installs in under 60 minutes in most enterprise environments. We guarantee a minimum of 100 percent ROI, with a typical ROI between 100 percent and 660 percent.

If you’re running Apache Spark, give us 6 hours—we’ll save you 30 percent on top of all the other optimizations you’ve already done. Visit the Proof-of-Value page to learn more about getting started with a free, no-risk trial or contact sales@pepperdata.com for more information.

Contact Pepperdata today for more information on how to fully leverage the Spark framework and how our Spark optimization solution can help you get more from your Spark workloads.