Apache Spark versus Kubernetes? Or both? The past few years have seen a dramatic increase in companies deploying Spark on Kubernetes (K8s). This isn’t surprising, considering the benefits that K8s brings to the table. Adopting Kubernetes can help improve resource utilization and reduce cloud expenses, a key initiative in many organizations given today’s economic climate.

Fueled by widespread migration to the cloud, the number of companies that deploy Spark on Kubernetes specifically is growing, with 61 percent of Kubernetes professionals saying that they’re running stateful applications like Spark on K8s. However, enterprises that run Spark on Kubernetes must be ready to address the challenges that come with this solution. Above all, they need observability into their infrastructure and a straightforward way to optimize multiple aspects of its performance.

What is Spark on Kubernetes?

Spark is an open-source analytics engine designed to process large volumes of data. It gives users a unified interface for programming entire clusters with implicit data parallelism and fault tolerance. Kubernetes is an open-source container-orchestration platform that automates the deployment, scaling, and management of containerized applications.

Think of Spark on Kubernetes this way: Spark provides the computing framework, while Kubernetes manages the cluster. Kubernetes acts as a kind of operating system for the cluster, deciding where workloads run and how resources are shared among them. As a result, this combination delivers better cluster utilization and more flexible resource allocation, which can translate into significant cost savings.

Should You Run Spark on Kubernetes?

There’s no Spark vs. Kubernetes debate. We recommend enterprises deploy Spark on Kubernetes because it’s a more logical and practical approach than running Spark on YARN. For one, Spark on Kubernetes environments don’t have YARN’s limitations. YARN clusters are complex to operate and often consume more compute resources than a job needs. In many cloud deployments, teams also create and tear down a YARN cluster for every job. This setup wastes resources, which drives up costs, and it also makes task management inefficient.

Meanwhile, Kubernetes has exploded in the big data analytics scene and touched almost every enterprise technology, including Spark. With its growing prevalence and the rapid expansion of its user community, Kubernetes is positioned to replace YARN as the dominant resource manager for big data workloads.

Is there really a contest between Kubernetes and YARN as the platform for Spark? Recent trends suggest the industry is moving toward Kubernetes.

Many Spark users point to the advantages of running Spark jobs on Kubernetes rather than YARN. For one, it’s not difficult to deploy Spark applications into an organization’s existing Kubernetes infrastructure. Sharing one platform makes it faster and easier to align the efforts and goals of multiple software delivery teams.

Second, recent Spark versions (starting with 3.2) have resolved earlier performance and reliability issues with Kubernetes. Using Kubernetes to manage Spark jobs can deliver better performance and lower costs than YARN. Multiple test runs conducted by Amazon showed a 5 percent time savings when using Kubernetes instead of YARN. Another study demonstrated that Amazon EMR on Amazon EKS can provide up to 61 percent lower costs and up to 68 percent performance improvement for Spark workloads.

Additional benefits of running Spark on K8s are still emerging. But the biggest reason that enterprises should adopt Kubernetes? Enterprises and cloud vendors have expressed broad support for the platform through the CNCF (Cloud Native Computing Foundation). Spark on Kubernetes is simply the future of large-scale data analytics.

How Does Spark Run on Kubernetes?

When you submit a Spark application to Kubernetes, Spark creates a Spark driver that runs in a Kubernetes pod. The driver then creates executors, which also run in Kubernetes pods, connects to them, and executes the application code.

Once the application completes, the executor pods terminate and are cleaned up, but the driver pod persists its logs and remains in a “completed” state in the Kubernetes API. It stays that way until it is eventually garbage collected or manually cleaned up.
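As a minimal sketch of the submission flow described above, the Python function below assembles the `spark-submit` arguments for running an application in Kubernetes cluster mode, where the driver itself runs in a pod. The `k8s://` master prefix, `--deploy-mode cluster`, and the `spark.kubernetes.*` and `spark.executor.instances` properties are standard Spark options; the API server URL, container image, and application path are hypothetical placeholders you would replace with your own.

```python
import shlex
from typing import Dict, List, Optional


def build_spark_submit_args(
    api_server: str,
    image: str,
    app_file: str,
    app_name: str = "spark-on-k8s-example",
    namespace: str = "default",
    executor_instances: int = 2,
    extra_conf: Optional[Dict[str, str]] = None,
) -> List[str]:
    """Return spark-submit argv for Kubernetes cluster mode.

    In cluster mode, spark-submit asks the Kubernetes API server to start the
    driver pod; the driver then requests its own executor pods.
    """
    conf = {
        # Image used for both the driver and executor pods.
        "spark.kubernetes.container.image": image,
        # Namespace where the driver and executor pods are created.
        "spark.kubernetes.namespace": namespace,
        # Fixed number of executor pods the driver will request.
        "spark.executor.instances": str(executor_instances),
    }
    conf.update(extra_conf or {})  # caller-supplied properties override defaults

    args = [
        "spark-submit",
        # The k8s:// prefix selects Kubernetes as the cluster manager.
        "--master", f"k8s://{api_server}",
        "--deploy-mode", "cluster",
        "--name", app_name,
    ]
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    # local:// means the file is already inside the container image.
    args.append(app_file)
    return args


if __name__ == "__main__":
    # Hypothetical endpoint, image, and path, for illustration only.
    cmd = build_spark_submit_args(
        api_server="https://k8s-apiserver.example.com:6443",
        image="registry.example.com/spark-py:3.5.0",
        app_file="local:///opt/spark/examples/src/main/python/pi.py",
    )
    print(shlex.join(cmd))
```

Passing `{"spark.kubernetes.driver.pod.name": "my-driver"}` (a hypothetical name) in `extra_conf` gives the driver pod a predictable name, which makes it easier to find the completed driver pod's logs and clean it up later. Building an argument list rather than one string also avoids shell-quoting problems when handing it to `subprocess.run`.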

Efficient Resource Utilization

Businesses are embracing Spark on Kubernetes to improve the utilization of their cloud resources. Dynamic allocation, which lets Spark add and remove executor pods as demand changes, helps streamline cloud processes and shorten deployment time.

Software engineers, developers, and IT specialists alike love Spark because it runs computational tasks significantly faster than the older Hadoop MapReduce framework.

With a Spark on Kubernetes approach, iteration cycles can speed up by as much as 10 times thanks to containerization, with reports of five-minute dev workflows reduced to 30 seconds. Containerization with Kubernetes is less resource intensive than hardware-level virtualization, and dynamic allocation on Kubernetes lets Spark scale executors to match the work at hand, which can dramatically increase processing throughput.
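Dynamic allocation is switched on through ordinary Spark properties. The sketch below collects them in one place; the executor bounds and idle timeout are illustrative values, not recommendations. Because Kubernetes deployments typically have no external shuffle service, it also enables `spark.dynamicAllocation.shuffleTracking.enabled` (available since Spark 3.0), which lets Spark track shuffle files itself so that executors still holding shuffle data are not removed prematurely.

```python
from typing import Dict


def dynamic_allocation_conf(
    min_executors: int = 1,
    max_executors: int = 10,
    idle_timeout: str = "60s",
) -> Dict[str, str]:
    """Spark properties that let the executor pod count follow the workload.

    The default bounds and timeout are illustrative assumptions.
    """
    if not 0 <= min_executors <= max_executors:
        raise ValueError("need 0 <= min_executors <= max_executors")
    return {
        "spark.dynamicAllocation.enabled": "true",
        # No external shuffle service on Kubernetes by default, so Spark
        # tracks shuffle files itself before releasing executors.
        "spark.dynamicAllocation.shuffleTracking.enabled": "true",
        # Lower and upper bounds on the number of executor pods.
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
        # Executors idle longer than this are released, freeing pod capacity.
        "spark.dynamicAllocation.executorIdleTimeout": idle_timeout,
    }


if __name__ == "__main__":
    for key, value in dynamic_allocation_conf(min_executors=2, max_executors=20).items():
        print(f"--conf {key}={value}")
```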

Easy, Centralized Administration

It’s common for Spark on Kubernetes projects to mix several components for data handling and Spark job orchestration, such as various backends and Spark SQL data stores, to name a few. Running these components as workloads in the same Kubernetes cluster can improve performance, because Kubernetes ensures each workload has adequate resources and connectivity to all its dependencies, whether they are in the same cluster or outside of it.
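Kubernetes can only give each workload adequate resources if every pod declares what it needs. As a rough worked example, the function below approximates the memory Spark requests for each executor pod: the executor heap plus an overhead of `max(factor × heap, 384 MiB)`. It assumes the default overhead factor of 0.10, no explicit `spark.executor.memoryOverhead`, and no PySpark or off-heap memory, any of which would change the result.

```python
# Spark never assigns less than this much overhead per executor container.
MEMORY_OVERHEAD_MIN_MIB = 384


def executor_pod_memory_mib(executor_memory_mib: int, overhead_factor: float = 0.10) -> int:
    """Approximate executor pod memory: heap plus non-heap overhead, in MiB.

    Assumes no explicit spark.executor.memoryOverhead, no
    spark.executor.pyspark.memory, and off-heap memory disabled.
    """
    # Truncate to whole MiB, then apply the 384 MiB floor.
    overhead_mib = max(int(overhead_factor * executor_memory_mib), MEMORY_OVERHEAD_MIN_MIB)
    return executor_memory_mib + overhead_mib


if __name__ == "__main__":
    for heap in (2048, 4096, 8192):
        print(f"spark.executor.memory={heap}m -> pod memory {executor_pod_memory_mib(heap)} MiB")
```

With a 4096 MiB heap, for instance, the overhead is 409 MiB and each executor pod asks for about 4505 MiB, the figure the Kubernetes scheduler uses when choosing a node. Small heaps hit the 384 MiB floor instead, so a 2048 MiB heap becomes a 2432 MiB pod.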

Big Cost Savings

In the world of large-scale data analytics, cloud computing, and pay-as-you-go pricing, every enterprise wants to cut its costs. In fact, a recent study found that as much as a third of cloud spend goes directly to waste. That is why many enterprises are looking to Spark on K8s for efficient resource sharing and utilization. Running Apache Spark on K8s has helped enterprises reduce their cloud costs substantially through workload isolation and efficient resource sharing.

How does this cost reduction happen? Users can deploy all their apps in one Kubernetes cluster. When an application finishes, Kubernetes can quickly tear down its containers and reallocate the freed resources to another application, often within seconds. Teams can then further tune their Spark on Kubernetes configuration based on Spark metrics and other performance benchmarks.

The Problems with Spark on Kubernetes

Kubernetes is increasingly important for a unified IT infrastructure, and Spark is the number one big data application moving to the cloud. However, Spark applications tend to be quite inefficient.

These inefficiencies manifest in a variety of ways. Below are some of the biggest stumbling blocks we have found among our customers:

Initial Deployment

Initial deployment can be among the biggest challenges when running Spark on Kubernetes. The technology is complex, and for those unfamiliar with Spark on Kubernetes, the framework, language, tools, and so on can be daunting. Pepperdata’s State of Kubernetes 2023 Report revealed that over half of Kubernetes professionals surveyed considered the “steep learning curve required for employees to upskill across software development, operations, and security” a challenge to K8s adoption, including Spark on K8s adoption.

Running Spark apps on Kubernetes infrastructure at scale requires substantial expertise. Even those with considerable Kubernetes knowledge recognize that there are parts to build before deployment, such as clusters, node pools, the spark-operator, the K8s cluster autoscaler, Docker registries, and more.
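The spark-operator mentioned above lets teams describe a Spark job declaratively as a Kubernetes custom resource instead of calling `spark-submit` directly. Below is a minimal sketch that builds a `SparkApplication` manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. It assumes the Kubeflow Spark Operator with its `sparkoperator.k8s.io/v1beta2` API is installed and that a service account named `spark` (a hypothetical name) has permission to create pods; verify the field names against the operator version you deploy.

```python
import json
from typing import Any, Dict


def spark_application_manifest(
    name: str,
    image: str,
    main_file: str,
    spark_version: str,
    namespace: str = "default",
    service_account: str = "spark",  # hypothetical account with pod-creation RBAC
    executor_instances: int = 2,
) -> Dict[str, Any]:
    """Build a SparkApplication custom resource for the Spark Operator (v1beta2)."""
    return {
        "apiVersion": "sparkoperator.k8s.io/v1beta2",
        "kind": "SparkApplication",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "Python",  # PySpark application
            "mode": "cluster",  # the driver runs in its own pod
            "image": image,
            "mainApplicationFile": main_file,
            "sparkVersion": spark_version,
            "restartPolicy": {"type": "Never"},  # do not resubmit on failure
            "driver": {"cores": 1, "memory": "1g", "serviceAccount": service_account},
            "executor": {"cores": 1, "instances": executor_instances, "memory": "2g"},
        },
    }


if __name__ == "__main__":
    manifest = spark_application_manifest(
        name="pyspark-pi",
        image="spark:3.5.0",  # assumed image tag; use one your cluster can pull
        main_file="local:///opt/spark/examples/src/main/python/pi.py",  # assumed path
        spark_version="3.5.0",
    )
    # Save the output to a file and apply it with: kubectl apply -f <file>
    print(json.dumps(manifest, indent=2))
```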

Migration

Moving to Kubernetes can put your enterprise in an advantageous position, much like moving to the cloud or adopting big data analytics. But that is only possible with a sound strategy in place before migration. One of the most common reasons a migration to Kubernetes struggles, or fails outright, is that leaders adopt the technology without a well-defined plan and implementation strategy.

Additionally, many enterprises lack the prerequisite skills within their organization to navigate a successful migration. Switching to a Spark on K8s architecture requires considerable talent to make the transition smooth and successful. Ignoring or failing to recognize underlying issues with the applications or infrastructure, such as scaling or reliability, can cause migration challenges.

Monitoring and Alerting

Kubernetes is a complex technology, and monitoring it can be difficult, even more so when you combine Spark with Kubernetes. Choosing the right tool to monitor and assess your Kubernetes implementation adds to this complexity. The volume, velocity, and variety of data flowing through modern large-scale data analytics platforms mean that performance monitoring and optimization have outgrown human capabilities. Generic application performance monitoring software is often not designed or configured to handle large Spark on K8s workloads and other big data infrastructure. Effective Kubernetes and Spark monitoring now requires comprehensive, robust tools that are purpose-built for large-scale data workloads on Kubernetes.
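Before adopting purpose-built tooling, teams often start by exposing Spark’s own metrics in Prometheus format. The sketch below gathers the relevant properties, assuming Spark 3.0 or later (both endpoints are marked experimental there) and a Prometheus server configured for annotation-based pod discovery. The `prometheus.io/*` annotations are a widespread convention, not something Kubernetes or Spark interprets, and port 4040 is the default driver UI port.

```python
from typing import Dict


def prometheus_metrics_conf(ui_port: int = 4040) -> Dict[str, str]:
    """Spark properties that expose driver and executor metrics for Prometheus."""
    annotation = "spark.kubernetes.driver.annotation."
    return {
        # Executor metrics served by the driver UI at /metrics/executors/prometheus.
        "spark.ui.prometheus.enabled": "true",
        # Metrics-system sink that serves driver metrics at /metrics/prometheus.
        "spark.metrics.conf.*.sink.prometheusServlet.class":
            "org.apache.spark.metrics.sink.PrometheusServlet",
        "spark.metrics.conf.*.sink.prometheusServlet.path": "/metrics/prometheus",
        # Scrape hints placed on the driver pod (a convention, not a standard).
        annotation + "prometheus.io/scrape": "true",
        annotation + "prometheus.io/path": "/metrics/executors/prometheus",
        annotation + "prometheus.io/port": str(ui_port),
    }


if __name__ == "__main__":
    for key, value in prometheus_metrics_conf().items():
        print(f"--conf {key}={value}")
```

Because a single `prometheus.io/path` annotation can point at only one endpoint, this sketch scrapes executor metrics; collecting the driver’s `/metrics/prometheus` output would need a second scrape job. Raw metrics like these are a starting point, not a replacement for workload-aware analysis.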

Cost Management

Pepperdata’s State of Kubernetes 2023 Report highlighted another significant obstacle to the adoption of Kubernetes: cost management. Almost 60% of respondents cited “significant or unexpected spend on compute, storage, networking infrastructure, and/or cloud-based IaaS” as an impediment to Kubernetes adoption in general, whether for Spark or other workloads. Furthermore, over half cited cost savings as their primary ROI metric for their Kubernetes deployments, underscoring their focus on cost savings as a driver and success criterion for Kubernetes adoption. With cost and resource discipline becoming an increasing business imperative, and even a competitive differentiator, this scrutiny of cost control will only continue.

Pepperdata: Powerful Observability Plus Cost Optimization for Spark on Kubernetes

The whole ‘Spark vs. Kubernetes’ argument has taken a back seat as the advantages of Spark on Kubernetes have become more obvious. Running Spark on Kubernetes can accelerate product cycles and support continuous operations.

The expansion of data science and machine learning has accelerated the adoption of containerization. This trend has made Spark on Kubernetes a preferred setup for data processing and modeling ecosystems. Spark on Kubernetes lets users treat GPUs and CPUs as elastic, abstracted resources and scale them on demand.

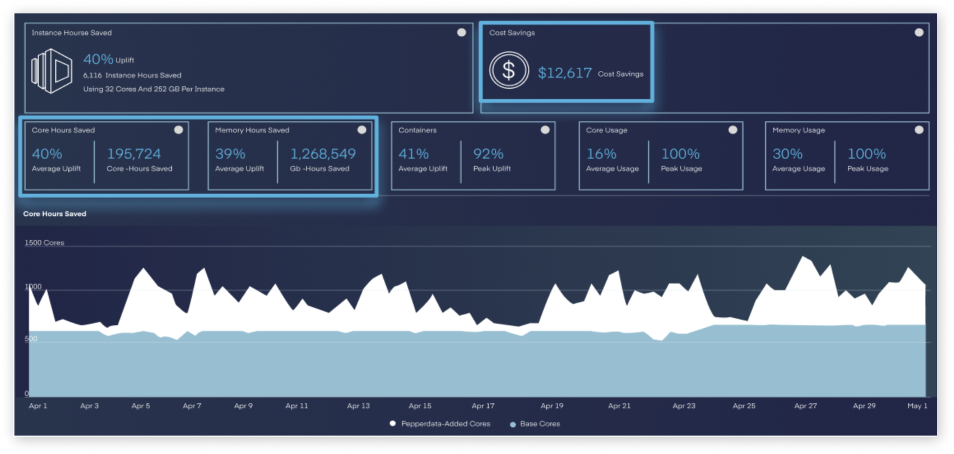

With the increasing adoption of Spark on Kubernetes naturally comes increased attention on cost control. For clients ranging from global giants to tech startups, Pepperdata Capacity Optimizer’s ability to continuously and autonomously reduce instance hours and costs and improve utilization in real time through its Continuous Intelligent Tuning has saved millions of dollars. By informing the Kubernetes scheduler of exact resource usage and ensuring that every instance is fully utilized before a new instance is added, Pepperdata delivers 30 to 47 percent immediate cost savings with no manual application tuning, along with a guaranteed minimum ROI of 100 percent and ROI of up to 662 percent.
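The general idea of filling existing instances before adding new ones can be illustrated with a classic heuristic. The sketch below is a generic first-fit decreasing bin-packing example, not Pepperdata’s implementation: it places hypothetical pod CPU requests (in millicores) onto nodes of a fixed size and opens a new node only when no existing node has room.

```python
from typing import List


def pack_first_fit_decreasing(requests_m: List[int], node_capacity_m: int) -> List[List[int]]:
    """Assign CPU requests (millicores) to fixed-size nodes with first-fit decreasing.

    A new node is opened only when no already-open node has enough free capacity.
    """
    if any(r <= 0 or r > node_capacity_m for r in requests_m):
        raise ValueError("every request must be in (0, node_capacity_m]")
    nodes: List[List[int]] = []  # requests placed on each node
    free: List[int] = []  # remaining capacity of each node
    # Placing the largest requests first tends to leave fewer unusable gaps.
    for req in sorted(requests_m, reverse=True):
        for i, room in enumerate(free):
            if req <= room:
                nodes[i].append(req)
                free[i] = room - req
                break
        else:
            # No existing node fits: only now is a new node added.
            nodes.append([req])
            free.append(node_capacity_m - req)
    return nodes


if __name__ == "__main__":
    placement = pack_first_fit_decreasing([4000, 2500, 2000, 1500], node_capacity_m=4000)
    print(len(placement), placement)
```

Here four pods needing 10 cores in total land on three 4-core nodes, the minimum possible, since 10 cores cannot fit on two. First-fit decreasing is a heuristic and is not guaranteed to find the optimal packing in every case.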

Optimization with Enhanced Observability for Apache Spark

Alongside Real-Time Cost Optimization, Pepperdata also provides the option for full-stack observability and real-time insights across all of your Apache Spark on Kubernetes workloads, at no additional cost to you.

For those interested in additional visibility into their Apache Spark cluster and application activity, Pepperdata’s observability dashboard offers deep insights not readily available through general-purpose monitoring or out-of-the-box performance management tools. Pepperdata’s dashboard is a complement to Pepperdata Capacity Optimizer and available at no extra cost to customers of Capacity Optimizer. Pepperdata’s combination of Real-Time Cost Optimization and additional observability empowers you to both optimize and understand the performance and cost of your large-scale data analytics clusters and applications.

Resolve System-Wide Issues with High-Level Cluster Observability

Pepperdata’s observability at the cluster level helps you ensure that your Spark on Kubernetes infrastructure is optimally sized for your unique workloads. Observability at the cluster level also enables you to rapidly diagnose, troubleshoot, and resolve system-wide issues by providing answers to such questions as:

- Why did my cluster slow to a crawl in the middle of the night?

- What resources are currently available at the pod level?

- When and where are bottlenecks occurring in my cluster?

Gain Deeper Insights with Granular Application-Level Observability

Pepperdata’s observability at the application level gives developers deep insight into the performance of tens of thousands (or even more!) of Spark applications running concurrently on Kubernetes. Application-level observability speeds issues to resolution by delivering answers to such questions as:

- What are all the applications that ran in my environment in the last month?

- Why is Application X consuming all the available memory and CPU?

- For an application that ran multiple times, which runs were fastest/slowest and why?
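Stock Spark can answer a simple version of the last question through its monitoring REST API, which the History Server (default port 18080) serves under `/api/v1/applications`. The sketch below is an independent illustration, not part of Pepperdata’s product: it takes the parsed JSON list that endpoint returns and finds the fastest and slowest completed runs of a given application name using each attempt’s `duration` field (in milliseconds). The sample records are made up.

```python
from typing import Any, Dict, List, Tuple

Run = Tuple[str, int]  # (application id, duration in ms)


def fastest_and_slowest_runs(apps: List[Dict[str, Any]], name: str) -> Tuple[Run, Run]:
    """Return the fastest and slowest completed runs of the application called `name`.

    `apps` is the parsed JSON list from the History Server's /api/v1/applications.
    """
    runs = [
        (app["id"], attempt["duration"])
        for app in apps
        if app.get("name") == name
        for attempt in app.get("attempts", [])
        if attempt.get("completed")  # skip runs that are still in progress
    ]
    if not runs:
        raise ValueError(f"no completed runs named {name!r}")
    ordered = sorted(runs, key=lambda run: run[1])
    return ordered[0], ordered[-1]


if __name__ == "__main__":
    # Made-up records shaped like the REST API response.
    sample = [
        {"id": "app-001", "name": "nightly-etl",
         "attempts": [{"duration": 540000, "completed": True}]},
        {"id": "app-002", "name": "nightly-etl",
         "attempts": [{"duration": 312000, "completed": True}]},
        {"id": "app-003", "name": "nightly-etl",
         "attempts": [{"duration": 95000, "completed": False}]},
    ]
    fastest, slowest = fastest_and_slowest_runs(sample, "nightly-etl")
    print("fastest:", fastest, "slowest:", slowest)
```

In practice you would load `apps` from your own History Server URL, for example with `json.load(urllib.request.urlopen(url))`. Explaining why a run was slow still requires digging into its stages and executors.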

Pepperdata’s dashboard also quantifies the dollar cost savings of recovered resources that would otherwise go to waste if Pepperdata Capacity Optimizer were not installed.

Figure 1: With Pepperdata Capacity Optimizer installed, this cloud-based customer recovered approximately 40% of their CPU and memory hours, resulting in a cost savings of over $12,000 on a single cluster in just one month!

It’s easy to get started with Pepperdata Capacity Optimizer and observability dashboards via a free Savings Demo. Pepperdata typically installs in most enterprise clusters in under 60 minutes and begins delivering efficiencies and cost savings as soon as it is running. Pepperdata also guarantees a minimum of 100 percent ROI, with a typical ROI between 100 and 660 percent.

We also offer free Proofs of Value, which allow organizations to explore the full value of Pepperdata in their environment. Leading companies such as Citibank, Autodesk, Royal Bank of Canada, IQVIA, and those in the Fortune 5 depend on Pepperdata to visualize, manage, and optimize the performance of their large-scale data analytics investments. To learn more or get started, visit pepperdata.com or contact us at sales@pepperdata.com.