Companies running Kubernetes workloads often discover significant, unexpected waste in the form of underutilized compute resources. Smart organizations implement a host of FinOps practices to reduce this waste and the cost it incurs:

- Implementing Savings Plans

- Purchasing Spot Instances and Reserved Instances

- Manually tuning their infrastructure platform

- Purchasing Graviton instances

- Rightsizing instances

- Making configuration tweaks

… and the list goes on.

But these are infrastructure-level optimizations that don’t address waste within an application.

Even in Kubernetes environments, most applications are provisioned for peak use but actually run at peak levels for only a small fraction of the time. As a result, most applications are overprovisioned for CPU and memory from the start, which leaves resources underutilized or wasted.

Typical applications can be overprovisioned by 30 percent or more. The FinOps Foundation recently reported that workload optimization and waste reduction have become the top priority for cloud practitioners, while the Flexera 2025 State of the Cloud Report found that managing cloud spend is the top challenge for 84 percent of cloud decision makers and users.

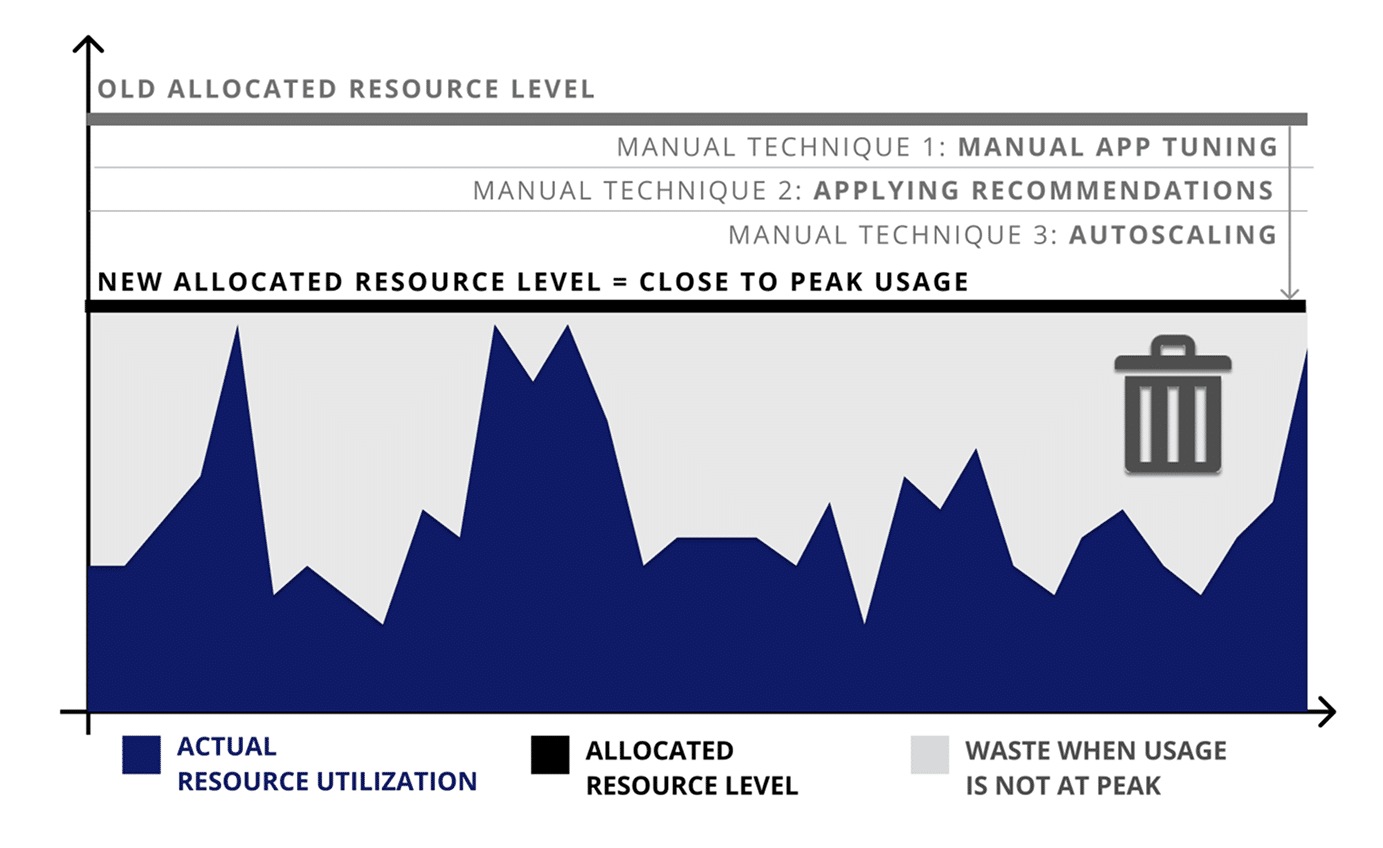

Underutilization Due to “Rightsizing for Peak”

You might be operating the most efficient infrastructure in the world, but if your applications are overprovisioned, they’re going to use that infrastructure inefficiently. And these inefficiencies can significantly impact utilization levels, performance, and cost.

Overprovisioning stems from the gap between developer allocations (typically set for peak usage) and actual usage. Developers allocate the memory and compute resources they think their applications will need at peak, but memory and compute usage aren’t static: they vary widely and reach peak for only a fraction of the runtime. When an application runs “in the Valley” (below peak, which can be 80 or 90 percent of its runtime), you’re paying for those underutilized resources every day. Why? Because it’s nearly impossible to allocate, or “rightsize,” for the runtime Valley.
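To make the Peak-versus-Valley gap concrete, here is a minimal Python sketch of the arithmetic. It assumes a hypothetical application that uses 8 cores during 4 peak hours a day and 2 cores for the remaining 20 hours (so it spends roughly 83 percent of its runtime in the Valley). The usage profile and the `peak_allocation_waste` helper are illustrative assumptions, not measurements.

```python
# Hypothetical hourly CPU usage (cores) for one application over one day:
# 20 hours in the Valley at 2 cores, 4 peak hours at 8 cores.
hourly_usage = [2.0] * 20 + [8.0] * 4

def peak_allocation_waste(usage):
    """Return (allocated, used, idle) core-hours when the request is sized for peak."""
    request = max(usage)               # developer sizes the request for the peak hour
    allocated = request * len(usage)   # that request stays reserved every hour
    used = sum(usage)                  # what the application actually consumes
    return allocated, used, allocated - used

allocated, used, idle = peak_allocation_waste(hourly_usage)
print(f"allocated={allocated} used={used} idle={idle} "
      f"utilization={used / allocated:.1%}")
```

With the request sized for peak, only 72 of the 192 reserved core-hours are used, a utilization of 37.5 percent; the other 120 core-hours are paid for but idle.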

Various Manual Techniques to Rightsize the Allocated Resource Level

Figure 1: To minimize underutilized CPU and memory, developers try to size their resource requests through techniques like manual tuning, applying recommendations, or autoscaling. However, this manual effort cannot keep up with the real-time dynamism of ever-changing datasets and applications, resulting in inefficiencies and waste when applications run below peak levels.

Let’s look at what happens technically within a cluster. When allocations are set for peak, the scheduler reserves enough compute and memory on a node to cover the requested allocation, no questions asked. Then, when the cluster appears fully allocated and more applications arrive, the scheduler has only two options:

- Put the new workloads or applications into a queue or pending state until resources free up.

- Direct the autoscaler to spin up new instances at additional cost, even though existing resources are not fully utilized.

With only these two options available, you either pay for resources you don’t need or lose efficiency as job throughput drops unnecessarily.
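The following is a toy model, not the real Kubernetes scheduler, which runs many more filters and scoring plugins. It illustrates the key point: placement decisions compare pod requests, not live usage, with a node's allocatable capacity, so nodes can look full while most of their CPU sits idle. The `Node` class, the `schedule` function, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    name: str
    allocatable_cpu: float   # cores the scheduler may hand out on this node
    requested_cpu: float     # sum of requests of pods already placed here
    used_cpu: float          # what those pods actually consume right now

    def fits(self, request: float) -> bool:
        # Mirrors the idea behind the scheduler's resource-fit check:
        # compare requests (not live usage) with allocatable capacity.
        return self.requested_cpu + request <= self.allocatable_cpu

def schedule(nodes: list[Node], request: float) -> Optional[str]:
    """Place a pod on the first node that fits; None means it stays Pending."""
    for node in nodes:
        if node.fits(request):
            node.requested_cpu += request
            return node.name
    return None

# Both nodes look full on paper, but most of their CPU sits idle.
nodes = [Node("node-a", 8.0, requested_cpu=8.0, used_cpu=3.0),
         Node("node-b", 8.0, requested_cpu=7.0, used_cpu=2.5)]
placement = schedule(nodes, request=2.0)
idle_cpu = sum(n.allocatable_cpu - n.used_cpu for n in nodes)
if placement is None:
    print(f"Pod Pending with {idle_cpu} idle cores: wait, or scale out")
```

Here the new 2-core pod fits on neither node, so it would stay Pending (or, with a cluster autoscaler, trigger a new node) even though 10.5 cores are idle across the two existing nodes.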

But Doesn’t Manual Tuning Solve My Waste and Underutilization Problems?

It’s commonly assumed that manual application tuning can fix this inherent overprovisioning and reclaim underutilized resources in Kubernetes environments. But allocating memory and compute to applications is never a “one and done” operation.

Manual tuning, or “rightsizing,” typically addresses only peak usage. However, the application profile or data set might change at any time, requiring allocations to be adjusted accordingly. Running your applications then becomes a never-ending game of “whack-a-mole,” constantly resizing and retuning in a futile attempt to track actual, fluctuating usage levels.
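One way to see why one-off rightsizing goes stale is to compute a recommendation from last week's usage and compare it with this week's. The minimal sketch below assumes a hypothetical rule (nearest-rank p95 of observed memory usage plus 15 percent headroom); the `recommend_request` helper and all sample values are illustrative, not any particular tool's algorithm.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile of a list of usage samples."""
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

def recommend_request(samples, pct=95, headroom=1.15):
    """Hypothetical one-off rightsizing rule: p95 usage plus 15% headroom."""
    return percentile(samples, pct) * headroom

# Memory usage samples (GiB) observed when the recommendation was made.
last_week = [4, 4, 5, 5, 5, 6, 6, 6, 7, 10]
request = recommend_request(last_week)   # about 11.5 GiB

# After the input data set grows, the usage profile shifts upward.
this_week = [6, 7, 8, 8, 9, 9, 10, 11, 13, 15]
over_request = [s for s in this_week if s > request]
unused_at_low = request - min(this_week)
print(f"request={request:.1f} GiB, samples above it: {over_request}, "
      f"unused at the quietest sample: {unused_at_low:.1f} GiB")
```

The roughly 11.5 GiB request derived from last week is exceeded by two of this week's samples once the data set grows, while the quietest sample still leaves about 5.5 GiB of the request unused. The request has to be re-tuned again, which is the “whack-a-mole” loop in practice.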

Nor can manual effort keep pace the way an automated system can. Even the most skilled engineer working to close the gap between Peak and Valley cannot recognize and react to the constant, real-time changes in application resource utilization across an enterprise-scale environment.

Figure 2: Given the scale, complexity, and velocity of most Kubernetes environments, manually “rightsizing” applications to minimize underutilization and waste quickly becomes a game of “whack-a-mole.”

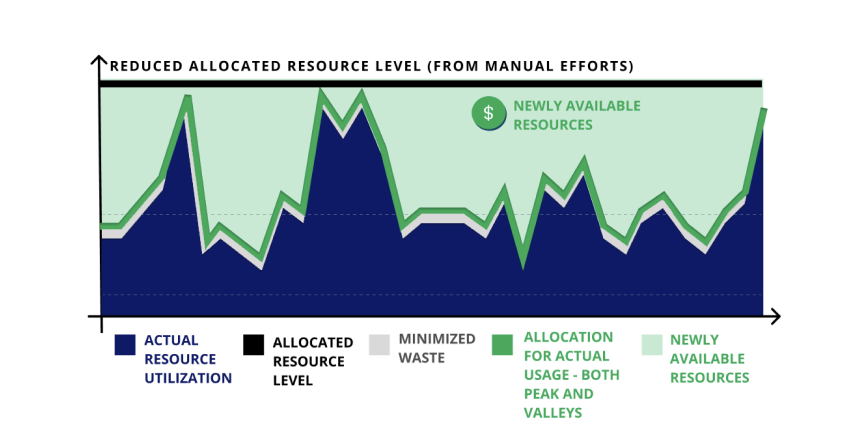

Pepperdata Capacity Optimizer: Automated Resource Optimization for Kubernetes Workloads

Pepperdata solves the problem of underutilized resources by changing the equation. Using patented algorithms, Pepperdata software provides the scheduler with real-time visibility into what’s actually available in your cluster, second by second, node by node, instance by instance, so you stay “rightsized” both at peak and in the Valley.

By analyzing resource usage in real time, Pepperdata Capacity Optimizer automatically and continuously communicates with the Kubernetes scheduler to place tasks on nodes with available resources, so new nodes are spun up only when existing nodes are fully utilized. The result: compute and memory utilization automatically increase by up to 80 percent, without ongoing manual tuning or applying recommendations.

With this uplift in application resource utilization, Pepperdata Capacity Optimizer reduces both compute and memory costs by an average of 30 percent, automatically, continuously, and in real time, even in clusters that are already heavily optimized through FinOps practices.

Real-time resource optimization is something that manual tuning just can’t achieve, especially at scale, no matter how many developers are assigned to the task.

Pepperdata Patented Algorithms Enable the Scheduler to Make Optimal Use of Resources During the Valleys

Figure 3: Pepperdata dynamically rightsizes resource allocations not just for peak usage, but also for the low-activity valleys where most waste occurs. This allows it to reclaim wasted capacity during the long stretches of underutilization, delivering a level of improved utilization, boosted performance, and cost savings that no other solution can match.

And all of this is configurable. If your goal is 85 percent maximum utilization (assuming there is enough workload to reach that rate), you can choose your level of optimization so that Capacity Optimizer automatically and continuously works with the Kubernetes scheduler to hit 85 percent utilization on a node-by-node basis. Pepperdata software also has the intelligence to back off as utilization approaches the target you set.
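The back-off behavior can be pictured with a simple decision rule. The sketch below is a hypothetical illustration, not Pepperdata's patented algorithm: it measures a node's headroom against live usage rather than requests, offers extra capacity up to a configurable target (85 percent here), and shrinks the offer linearly inside an assumed back-off zone just below the target. The `admissible_cpu` function and its `backoff_zone` parameter are invented for this example.

```python
def admissible_cpu(capacity, used, target=0.85, backoff_zone=0.10):
    """Hypothetical rule: how many extra cores to offer a node right now.

    Headroom is measured against live usage, not requests. Below
    (target - backoff_zone) the full headroom is offered; inside the zone
    the offer shrinks linearly, reaching zero at the target itself.
    """
    utilization = used / capacity
    headroom = max(target * capacity - used, 0.0)
    if utilization <= target - backoff_zone:
        return headroom
    # Inside the back-off zone (or above target): scale the offer down.
    scale = max(target - utilization, 0.0) / backoff_zone
    return headroom * scale

for used in (4.0, 12.8, 14.0):
    print(f"used={used} of 16 cores -> offer {admissible_cpu(16.0, used):.2f} extra cores")
```

At 4 of 16 cores used, the node can take about 9.6 more cores; at 12.8 cores (80 percent) it is inside the back-off zone and offers only about 0.4; at 14 cores it is above the target and offers nothing.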

Automatically Rightsize for Peaks and Valleys

You don’t need an engineering sprint or a quarter of planning to experience the benefits of Pepperdata. In just a few hours, through a FREE Trial/Proof of Value (POV), you’ll know how much you can save per day and over time, with no manual tuning.

Pepperdata pays for itself, immediately reducing instance hours and waste, increasing utilization, and freeing developers from manual tuning to focus on revenue-generating innovation. Customers enjoy Pepperdata’s 100 percent ROI guarantee and a typical ROI ranging from 100 to 660 percent, and they pay only when they save.