As a platform engineer, you’re tasked with running complex workloads—Apache Spark jobs, AI/ML pipelines, batch ETL—across dynamic Kubernetes environments. Performance matters. Time spent tuning matters. And so does cost.

But if you’re still relying on manual resource tuning to optimize your workloads, you’re playing a losing game.

Sure, you can tweak CPU and memory requests by hand. You can comb through Prometheus metrics, look at job logs, and estimate peaks. But Kubernetes doesn’t make that easy—and at scale, it’s practically impossible to stay ahead.

Here’s why manual tuning doesn’t work—and what actually does.

The Nature of Data Workloads Running on Kubernetes

Let’s get one thing straight: Kubernetes wasn’t originally built with data-intensive workloads in mind. It’s come a long way, but jobs like Spark, Presto, Ray, or TensorFlow training still introduce unique challenges:

- They’re bursty — CPU and memory usage can spike 10x for a few minutes, then idle.

- They’re variable — The same job can use vastly different resources depending on data size.

- They’re ephemeral — Jobs spin up and down quickly, making it hard to collect historical performance data.

Now try rightsizing that by hand. It’s like trying to tune an engine that rewires itself every time you turn the key. Developers and platform engineers can try to manage the compute and memory their applications need manually, but too many factors are in play for manual resource tuning to be effective.

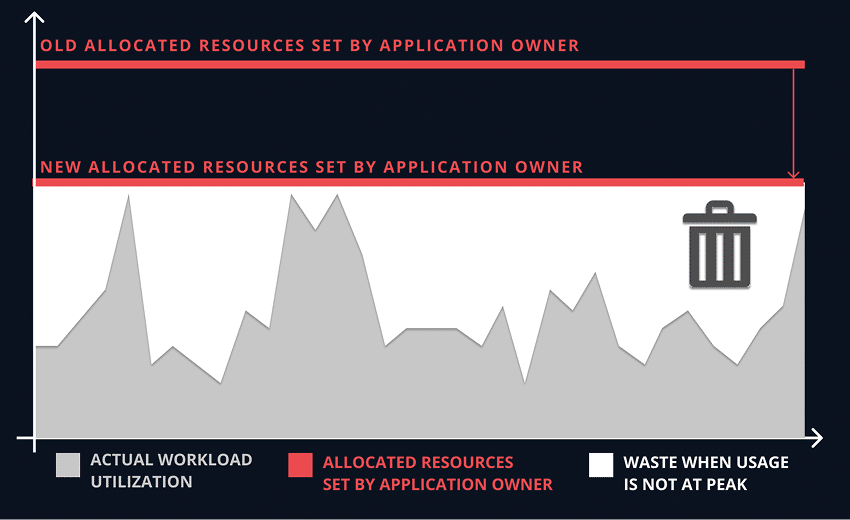

Developers and Platform Engineers Trying to Allocate Resources

Figure 1: Developers try to lower allocated resource levels, but they can’t adjust in real time to the actual peaks and valleys of resource utilization.

The Limitations of Manual Tuning

1. You’re Tuning Based on Averages, Not Spikes

Most developers and data platform engineers tune based on average usage metrics. But with Spark, a shuffle stage or join can blow past “normal” usage for just a few minutes—and crash the job if you’re not prepared. So, what do they do? Overprovision. A lot.
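To see why average-based sizing falls short, here is a minimal sketch using a hypothetical per-minute memory trace for one Spark executor. The numbers are invented for illustration, not measured data. The sketch compares a request sized at the mean against the observed peak and counts how many minutes exceed the mean.

```python
# Hypothetical per-minute memory usage (GiB) for one Spark executor:
# a steady baseline followed by a short shuffle-stage spike.
usage_gib = [2, 2, 2, 2, 2, 2, 2, 2, 7, 7]

avg = sum(usage_gib) / len(usage_gib)  # what average-based tuning would request
peak = max(usage_gib)                  # what the executor actually needs at its worst
minutes_over = sum(1 for u in usage_gib if u > avg)

print(f"average={avg:.1f} GiB, peak={peak} GiB, minutes above average={minutes_over}")
# prints: average=3.0 GiB, peak=7 GiB, minutes above average=2
```

If the container’s memory limit were set at that 3 GiB average, those two spike minutes are when it would be OOM-killed. Sizing to the 7 GiB peak avoids the crash but reserves more than twice the average for the rest of the run, which is exactly the overprovisioning trade-off.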

2. You Waste Time and Still Miss the Mark

Even if you have time to analyze metrics from kube-state-metrics, Prometheus, or Spark UI logs, you’re still reacting after a failure or bottleneck. And those tuning changes you just made? They’re static. Next week’s data volume could make them irrelevant.

3. You’re Paying for Headroom You Rarely Use

To avoid OOM kills, throttling, or failed jobs, teams pad their resource requests. That leads to inflated CPU/memory reservations, poor bin packing, and inefficient autoscaler behavior. Kubernetes schedules based on requests, not usage—so overprovisioned jobs hog space and trigger unnecessary node scale-ups.

This is especially painful when you’re orchestrating hundreds of short-lived jobs across shared clusters.
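Because the scheduler checks requests, not usage, against a node’s capacity, padded requests translate directly into extra nodes. The minimal sketch below uses a simplified, hypothetical scheduling model and the Spark executor from the example in the next section (8 GiB and 2 vCPUs requested, 4 GiB and 1 vCPU actually used). It assumes an m5.xlarge-sized node (4 vCPUs, 16 GiB) and ignores the capacity Kubernetes reserves for the kubelet and system daemons, so real-world numbers would be somewhat lower.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    vcpu: float
    gib: float


def pods_per_node(node: Resources, per_pod: Resources) -> int:
    """How many identical pods fit on one node (simplified scheduler model)."""
    # A pod fits only if both its CPU and its memory fit, so the tighter dimension wins.
    return int(min(node.vcpu // per_pod.vcpu, node.gib // per_pod.gib))


def nodes_needed(pod_count: int, per_node: int) -> int:
    return math.ceil(pod_count / per_node)


# Assumption: m5.xlarge-sized node, system reservations ignored.
node = Resources(vcpu=4, gib=16)
requested = Resources(vcpu=2, gib=8)  # what each executor asks for
used = Resources(vcpu=1, gib=4)       # what each executor actually peaks at

by_request = pods_per_node(node, requested)
by_usage = pods_per_node(node, used)
print(by_request, nodes_needed(100, by_request))  # prints: 2 50
print(by_usage, nodes_needed(100, by_usage))      # prints: 4 25
```

Packing by requests, 100 such executors need twice as many nodes as their real usage would justify, and every one of those extra nodes is something the autoscaler has to launch and you have to pay for.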

You’re Not Alone: Most Data Teams Overprovision

In most Kubernetes environments we analyze, 50–70 percent of requested CPU and memory is never used. And data-intensive workloads such as AI/ML tend to be the worst offenders.

Consider a typical example of what we see every day at Pepperdata:

- A Spark executor requests 8 GiB of memory and 2 vCPUs.

- Its actual peak usage is 4 GiB and 1 vCPU.

- The difference you're paying for but not using is 4 GiB and 1 vCPU.

- Multiply that by 100 executor pods across a busy cluster, and you’ve got serious underutilization.

The Costs of Manual Tuning:

Using the example above, where each executor wastes 4 GiB and 1 vCPU, you can see how costs spiral. Across 100 executors, that’s 400 GiB of memory and 100 vCPUs reserved but never used: the full capacity of 25 AWS m5.xlarge instances (4 vCPUs and 16 GiB each). At an on-demand rate of $0.192/hour over a roughly 730-hour month, that waste costs about $3,500/month. And that’s just one group of jobs on a single cluster. Multiply it across hundreds of workloads and clusters, and overprovisioning can cost hundreds of thousands of dollars per month.
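The arithmetic behind that figure can be checked with a short sketch. It assumes the 730-hour month commonly used for cloud billing and converts the idle reservation into m5.xlarge equivalents (4 vCPUs, 16 GiB each) at the $0.192/hour rate quoted above; the function name is hypothetical.

```python
HOURS_PER_MONTH = 730  # common billing approximation: 8,760 hours / 12 months

# m5.xlarge shape and the on-demand rate quoted in the text.
NODE_VCPU = 4
NODE_GIB = 16
NODE_USD_PER_HOUR = 0.192


def monthly_waste_usd(pods: int, idle_vcpu: float, idle_gib: float) -> float:
    """Monthly cost of reserved-but-unused capacity, in m5.xlarge equivalents."""
    total_vcpu = pods * idle_vcpu
    total_gib = pods * idle_gib
    # The idle reservation consumes whichever dimension fills nodes first.
    node_equivalents = max(total_vcpu / NODE_VCPU, total_gib / NODE_GIB)
    return node_equivalents * NODE_USD_PER_HOUR * HOURS_PER_MONTH


print(f"one executor: ${monthly_waste_usd(1, 1, 4):,.2f}/month")     # prints: one executor: $35.04/month
print(f"100 executors: ${monthly_waste_usd(100, 1, 4):,.2f}/month")  # prints: 100 executors: $3,504.00/month
```

In this example the CPU and memory waste happen to fill nodes at the same rate (1 vCPU per 4 GiB, matching the m5.xlarge ratio), so either dimension gives 25 node-equivalents; taking the maximum keeps the estimate sensible when the ratios differ.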

And you’re not just wasting cloud dollars on overprovisioned resources. You’re also constraining job throughput, reducing overall cluster efficiency, and tying up valuable engineers in hours of manual performance tuning that could be better spent on higher-value projects.

So What’s the Fix?

Real-Time, Automated Resource Optimization

The answer isn’t more dashboards or config tuning—it’s automated continuous optimization that adapts in real time to your workloads’ ever-changing needs.

That’s where Pepperdata comes in.

How it Works:

Pepperdata Capacity Optimizer is a real-time, automated Kubernetes resource optimization solution. It determines actual, second-by-second workload utilization and provides this information to the scheduler so that it can pack pending pods onto existing nodes as efficiently as possible based on actual hardware utilization.

Capacity Optimizer also works with autoscalers (including the Kubernetes Cluster Autoscaler and Karpenter) to ensure that new nodes are launched only when existing nodes are truly packed to their optimal capacity based on actual resource utilization. (In fact, Pepperdata customers have noted up to a 71 percent decrease in autoscaling when Capacity Optimizer is enabled.)

Through these two mechanisms, Capacity Optimizer increases utilization levels by up to 80 percent and delivers an average 30 percent cost savings automatically, continuously, and in real time with no application code changes.
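The general idea of placing pods by actual utilization rather than by requests can be illustrated with a toy decision function. This is a hypothetical sketch of the concept, not Pepperdata’s implementation or API. It asks whether a pending pod fits on any existing node: first by unreserved requested capacity (the check that leaves a pod Pending, which is what prompts the Cluster Autoscaler or Karpenter to add a node), then by unused physical capacity. The `headroom` safety margin is an invented parameter.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Node:
    name: str
    alloc_vcpu: float  # allocatable CPU
    alloc_gib: float   # allocatable memory
    req_vcpu: float    # sum of requests of pods already on the node
    req_gib: float
    used_vcpu: float   # measured current usage on the node
    used_gib: float


def fits_by_requests(node: Node, vcpu: float, gib: float) -> bool:
    # Request-based check: compares the new pod's request with unreserved capacity.
    return node.req_vcpu + vcpu <= node.alloc_vcpu and node.req_gib + gib <= node.alloc_gib


def fits_by_usage(node: Node, vcpu: float, gib: float, headroom: float = 0.8) -> bool:
    # Hypothetical usage-based check: fill only up to `headroom` of allocatable
    # capacity so there is still a margin for usage spikes.
    return (node.used_vcpu + vcpu <= headroom * node.alloc_vcpu
            and node.used_gib + gib <= headroom * node.alloc_gib)


def needs_new_node(nodes: Sequence[Node], vcpu: float, gib: float,
                   fits: Callable[[Node, float, float], bool]) -> bool:
    return not any(fits(n, vcpu, gib) for n in nodes)


# Two nodes fully reserved by requests but only half used in practice.
nodes = [
    Node("node-a", 4, 16, req_vcpu=4, req_gib=16, used_vcpu=2, used_gib=8),
    Node("node-b", 4, 16, req_vcpu=4, req_gib=16, used_vcpu=2, used_gib=8),
]

# Pending executor: requests 2 vCPU / 8 GiB, expected to use about 1 vCPU / 4 GiB.
print(needs_new_node(nodes, 2, 8, fits_by_requests))  # prints: True
print(needs_new_node(nodes, 1, 4, fits_by_usage))     # prints: False
```

By requests, both nodes look full and a new node would be launched; by measured usage, the executor fits on an existing node with room to spare.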

Capacity Optimizer can even optimize applications that run for just a few seconds, because it optimizes resources on nodes, not the applications themselves.

With Capacity Optimizer, developers are freed from manual tuning with an automated solution that pays for itself—saving them time to focus on revenue-generating innovation and helping companies reclaim otherwise wasted resources to maximize ROI for their spend in the cloud.

Summing Up: The Value of Pepperdata's Intelligent Kubernetes Optimization

As we have seen, Capacity Optimizer signals to the scheduler that more pods can be launched on existing nodes before the autoscaler scales up new nodes. As a result of this intelligence:

- Workloads on nodes can be scheduled based on real-time physical utilization.

- Pods are launched with optimized resource requests.

- The scheduler can make more accurate and efficient resource decisions with Pepperdata-provided data.

- The autoscaler can scale up more efficiently based on actual utilization.

Your Benefits:

- Improved utilization by up to 80 percent for GPU, CPU, and memory with real-time resource optimization

- Time saved and higher job throughput without manual tuning, applying recommendations, or changing application code

- Engineering time repurposed for higher-value strategic innovation

- Average cost reduction of 30 percent or more for data workloads on Kubernetes

And unlike the Vertical Pod Autoscaler, which derives its recommendations from historical usage and reacts relatively slowly, Capacity Optimizer makes decisions based on real-time conditions, not historical trends that may no longer be accurate. (Past performance is not always the best predictor of future behavior in today’s highly dynamic compute environments!)

Focus on Optimized Performance and Cost, Not Tuning

As a developer or data platform engineer, your goal isn’t to guess CPU and memory requests. It’s to deliver high-performing applications and reliable, cost-efficient infrastructure that scales.

Manual tuning won’t get you there. It’s reactive, time-consuming, and fundamentally flawed for fast-changing, highly scaled, data-intensive workloads. While manual tuning can be a helpful first step toward reducing overprovisioning, it can never do what Capacity Optimizer does: automatically pack pending pods more tightly onto existing nodes and prevent an autoscaler from spinning up costly nodes that aren’t truly needed.

Automated optimization eliminates the need for manual tuning—so you can focus on enabling your teams and revenue-generating innovation, not firefighting misconfigurations.