-

- Common Microservices Deployment Patterns in Kubernetes

-

- Autoscaling as a Design Pattern

- The Challenge with Scaling Patterns

- Why Optimization is Essential

-

- The FinOps Team

- Key Principles to a FinOps Framework

- The FinOps Lifecycle

-

- Why Microservices Workloads Strain Kubernetes

-

- How Pepperdata Dynamic Resource Optimization Works for Microservices Workloads on K8s

Microservices architecture has become a de facto standard for modern application development. But without effective microservices optimization, organizations face rising costs, wasted resources, and inconsistent performance. Pepperdata addresses these challenges with Dynamic Resource Optimization for Microservices.

The Benefits of Microservices Architecture and Containers in Kubernetes

Microservices architecture enables modular design, independent updates, and faster iteration by splitting an application into a separate set of services that are managed by individual developer teams. When combined with containers in Kubernetes, microservices provide portability and flexibility across cloud platforms.

This combination allows for the application to be managed by multiple teams focused on improving and innovating the service assigned to them. That said, with multiple services each requesting resources for their own purpose and operating independently, the increased complexity requires effective microservices optimization.

Consider a modern ecommerce application which might be comprised of the following microservices:

- Search service handles product queries.

- Cart service manages user shopping activity.

- Inventory service maintains stock accuracy.

- Recommendation service personalizes offerings.

- Checkout service processes payments.

Each of these services can be independently developed, deployed, and scaled. If demand surges for search (e.g., Black Friday traffic), the search microservice could be scaled independently of the others—no need to overprovision the entire application stack. That said, communication is critical across all these teams working on the application, and that can be unreliable for areas such as cost optimization.

Enter Kubernetes—it orchestrates these containerized microservices, automating deployment, providing built-in service discovery, load balancing, rolling updates, and resilient failure recovery. It’s why containerized, microservices architectures on Kubernetes have become the backbone for scalable, reliable enterprise applications.

Common Microservices Deployment Patterns in Kubernetes

- Sidecar Pattern: Extend a primary service with an auxiliary container (e.g., monitoring, logging, proxy).

- Ambassador Pattern: Route external requests through proxy containers to simplify communication.

- Adapter Pattern: Transform legacy outputs or protocols to work with modern APIs.

- Event-Driven Pattern: Trigger microservices asynchronously using message queues or event streams.

- Saga Pattern: Manage long-running, distributed transactions across multiple microservices.

Kubernetes simplifies adopting these microservices deployment patterns by automating scaling, networking, and service discovery for containerized applications. Whether it’s an ecommerce workload or AI-driven inference service, patterns like Sidecar or Saga help teams maintain modularity, observability, and resilience.

Scaling and Microservices Design Patterns on Kubernetes

One of the most important aspects of microservices design patterns is how they handle scaling. In containerized environments, scaling is essential to ensure services keep pace with demand spikes—whether in ecommerce applications during peak shopping seasons or AI-driven workloads that surge unpredictably.

Autoscaling as a Design Pattern

- Horizontal Pod Autoscaler (HPA): Adjusts the number of pods based on metrics like CPU or memory usage.

- Cluster Autoscaler / Karpenter: Provisions or removes nodes in response to pod scheduling needs.

- Vertical Pod Autoscaler (VPA): Recommends or sets pod resource requests and limits.

Together, these autoscaling practices represent a scaling-focused design pattern within microservices: the ability to grow and shrink resources dynamically. This pattern is critical for maintaining performance and cost control across containers in Kubernetes.

The Challenge with Scaling Patterns

While these scaling strategies are foundational, they also introduce challenges:

- Overprovisioning by default: Autoscalers often act on allocated resources rather than actual usage, triggering unnecessary node scaling.

- Inefficient utilization: Pods may request more than they need, leaving clusters underutilized.

- Reactive behavior: Scaling decisions happen after thresholds are breached, leading to lag in response.

Why Optimization is Essential

To make microservices design patterns for scaling truly effective, they must be paired with smarter, workload-aware optimization. Instead of scaling based on inflated requests, clusters should scale on real-time utilization. This ensures:

- Autoscalers only add capacity when containers are genuinely at capacity.

- Workloads are packed more densely, improving throughput.

- Cloud costs are reduced by preventing unnecessary node spin-ups.

In other words, scaling patterns are necessary—but without optimization, they create waste.

Why LLMs on Microservices Will Soar—and Why They Demand Smarter Kubernetes Deployment

With the modern application also comes the modern LLM. Rather than rewrite lines and lines of code so it can be fashioned into a Generative AI app, developers can deploy and build an LLM as a separate microservice to employ a GenAI component into their application.

Why microservices are a perfect fit for LLM inference:

- Modular, scalable inference components: Embed LLM services (e.g., prompt processing, embedding generation, summarization) as discrete, containerized microservices.

- Independently optimized scaling: If inference requests spike, scale just that microservice.

- Reduced cold-start latency: Keep model-serving containers warm.

Yet, many organizations overprovision GPUs to their service “just in case”—paying for idle capacity to ensure performance stability.

The Challenges of Running Microservices on Kubernetes

Without an automated optimization solution, microservices workloads running in Kubernetes environments suffer from overprovisioning that leads to wasted resources, and inefficient autoscaling.

Optimization methods are often limited to instance rightsizing, employing Karpenter, and manual tuning—which aren’t sustainable at scale.

Enterprises must adopt automated approaches to continuously optimize microservices workloads and control cloud costs.

Why Microservices Workloads Strain Kubernetes

The resources required for dynamic workloads on Kubernetes shift constantly. Developers can only react so quickly to changing resource requests from within their workload, and with this workload split into separate services vying for resources of their own, developers will request more resources just to ensure their service is running. And if only a set amount of resources are allocated to the entire workload, one microservice may be starved of GPU, CPU, or memory required for it to run effectively.

Static rightsizing and observability alone cannot solve the inefficiencies. Common myths persist, such as believing Karpenter is an all-in-one solution to rightsizing and autoscaling. In reality, microservices optimization requires continuous, automated alignment of CPU and memory requests to actual, real-time resource usage.

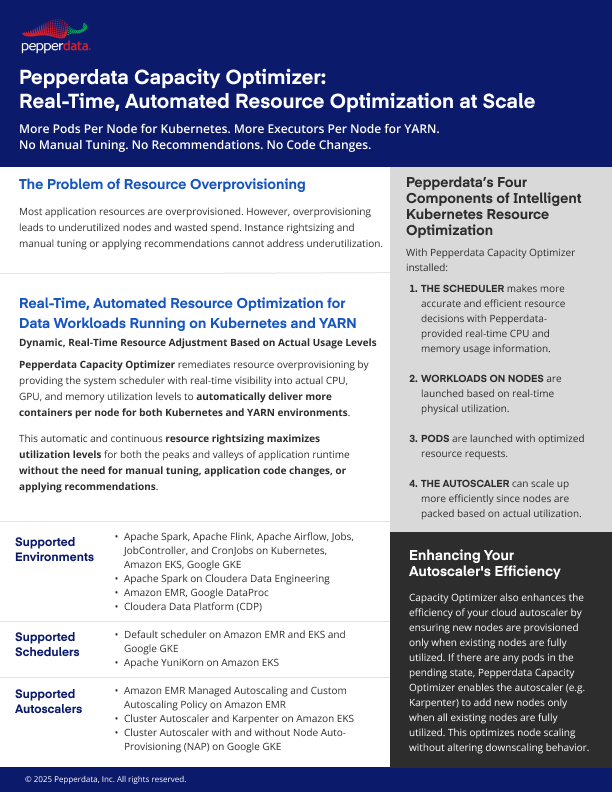

The Pepperdata Approach to Kubernetes Microservices Optimization

Pepperdata delivers Dynamic Resource Optimization to optimize microservices workloads without the need for manual tuning, applying recommendations, or changing application code. With Pepperdata, organizations can achieve up to 75% cost savings for their microservices workloads through increased utilization, enhanced autoscaling efficiency, and boosted throughput.

Particularly for microservices workloads deployed through Kubernetes services like Amazon EKS, optimization with Pepperdata is fully automatic with a 100% ROI so you only pay for what you use.

How Pepperdata Dynamic Resource Optimization Works for Microservices Workloads on K8s

- Decreases the amount of overprovisioned resources) resulting in more pods per node, greater utilization, and lower cost.

- Enhances the efficiency of the cloud autoscalers (including Karpenter) by ensuring that new nodes are provisioned only when the existing nodes are fully utilized.

- Optimization can be done at the service level, namespace level, or cluster level.

Getting Started with Microservices Optimization

Checklist:

- Deploy Pepperdata with a Helm chart depending which environment you’re running your microservices applications.

- Run Pepperdata to optimize your microservices workloads in real time.

- Evaluate the savings difference between before Pepperdata was enabled and after Pepperdata enabled.

FAQ — Myths vs. Reality & Technical Insights

It continuously rightsizes resources for containers in Kubernetes after observing for 24 hours, ensuring optimized placement and reducing waste.

Autoscalers scale based on allocated requests, not real usage. Pepperdata ensures true utilization drives scaling.

No—it’s fully complementary to tools like HPA and instance rightsizing for Kubernetes workloads by optimizing pod requests at launch without restarts.

Deploy in under an hour and begin achieving cost optimization microservices within a day.

Visit the FAQ page for more technical information about Pepperdata Capacity Optimizer.