Pepperdata is the only cost optimization solution that automatically optimizes cost and utilization rates at the application framework layer and is therefore complementary to all your other cloud cost optimization efforts. Pepperdata Capacity Optimizer is able to reduce cloud costs in some of the world’s largest clusters by eliminating waste in real time. For more information on what we do that makes us different, check out Pepperdata’s Secret Sauce.

Pepperdata makes your current cloud autoscaler work better. Cloud autoscalers spin up new instances when all existing resources have been allocated, but they cannot distinguish between allocated resources and resources that are actually utilized. Pepperdata enables the scheduler or cluster manager to schedule workloads based on resource utilization instead of resource allocation, which means that with Pepperdata you only pay for what you use. In an autoscaling environment, Pepperdata not only maximizes the utilization of each existing instance, it also ensures that new instances are added only when the existing instances are fully utilized.
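The difference between allocation-based and utilization-based scale-up decisions can be illustrated with a minimal Python sketch. This is not Pepperdata's algorithm or API: the `Node` class, both function names, the 10% headroom, and the sample numbers are all hypothetical, chosen only to show why a fully allocated cluster may still have room for more work.

```python
from dataclasses import dataclass


@dataclass
class Node:
    capacity_cores: float   # total cores on the instance
    allocated_cores: float  # cores requested by running containers
    used_cores: float       # cores those containers actually consume


def needs_new_node_by_allocation(nodes, request_cores):
    """Scale up when no node has enough *unallocated* capacity."""
    return not any(
        n.capacity_cores - n.allocated_cores >= request_cores for n in nodes
    )


def needs_new_node_by_utilization(nodes, request_cores, headroom=0.1):
    """Scale up only when no node has enough *idle* capacity.

    `headroom` is a hypothetical safety margin kept free on every node.
    """
    return not any(
        n.capacity_cores * (1 - headroom) - n.used_cores >= request_cores
        for n in nodes
    )


# Hypothetical cluster: almost fully allocated, but well under half utilized.
nodes = [Node(16, 16, 6), Node(16, 15, 9)]
request = 4  # cores requested by the next task

by_alloc = needs_new_node_by_allocation(nodes, request)
by_util = needs_new_node_by_utilization(nodes, request)
print(f"allocation view: add instance = {by_alloc}")
print(f"utilization view: add instance = {by_util}")
```

In this made-up cluster the allocation view asks for a new instance, while the utilization view finds room on an existing one. A production scheduler would also need to account for memory, usage spikes, and preemption, which this sketch ignores.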

Pepperdata supports the following:

- Amazon EMR: Batch workloads like Spark

- Amazon EKS: Batch workloads like Spark

Pepperdata works with some of the largest, most complex, multi-tenant, and highly scaled computing environments in the world, including two of the Fortune 10 and others in the Fortune 100 and 500.

Savings typically range from 10% to 50%, depending on the type of workload.

Pepperdata starts delivering cost savings as soon as it’s installed and typically pays for itself in hard cloud cost savings within a few months. Typical ROI ranges from 100% (meaning all costs are paid for by the savings Pepperdata enables within a few months) up to 662%. The automated, real-time optimization that Pepperdata enables saves customers running batch workloads an average of 30%. One of the largest companies in the world recently installed Capacity Optimizer on a single cluster and shaved 28% off its cloud cost. For this customer, that translates to over $400,000 in reduced cloud costs per year for a single cluster. A small startup recently experienced a 30% cloud cost reduction within a week of running Pepperdata, for a monthly savings of approximately $7,800.
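To put the two customer figures above in context, the short Python sketch below back-calculates the cloud spend each one implies. The baseline amounts are not stated in the text; they are derived here under the assumption that the quoted percentage applies to the whole bill for the cluster in question, and `implied_baseline` is a hypothetical helper name.

```python
def implied_baseline(savings: float, savings_rate: float) -> float:
    """Spend before optimization, given the savings and the fraction saved."""
    return savings / savings_rate


# Startup: about $7,800 saved per month at a 30% reduction.
startup_monthly_spend = implied_baseline(7_800, 0.30)
startup_annual_savings = 7_800 * 12

# Large company: "over $400,000" saved per year at 28%, so this is a lower bound.
enterprise_annual_spend = implied_baseline(400_000, 0.28)

print(f"Startup implied monthly spend: ${startup_monthly_spend:,.0f}")
print(f"Startup annualized savings: ${startup_annual_savings:,.0f}")
print(f"Enterprise implied annual spend (at least): ${enterprise_annual_spend:,.0f}")
```

The same function can be applied to your own bill in reverse: multiply current spend by an expected savings rate in the 10% to 50% range to get a rough savings estimate.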

You will also enjoy the soft cost savings of reduced personnel expenditures. You won’t need dedicated engineers constantly monitoring your systems or tweaking and tuning applications, and your finance personnel will no longer need to corral your engineering teams to implement recommendations. With Pepperdata, your development teams are freed from the tedium of tweaking and tuning code and can focus on high-value, innovative activities that grow your business.

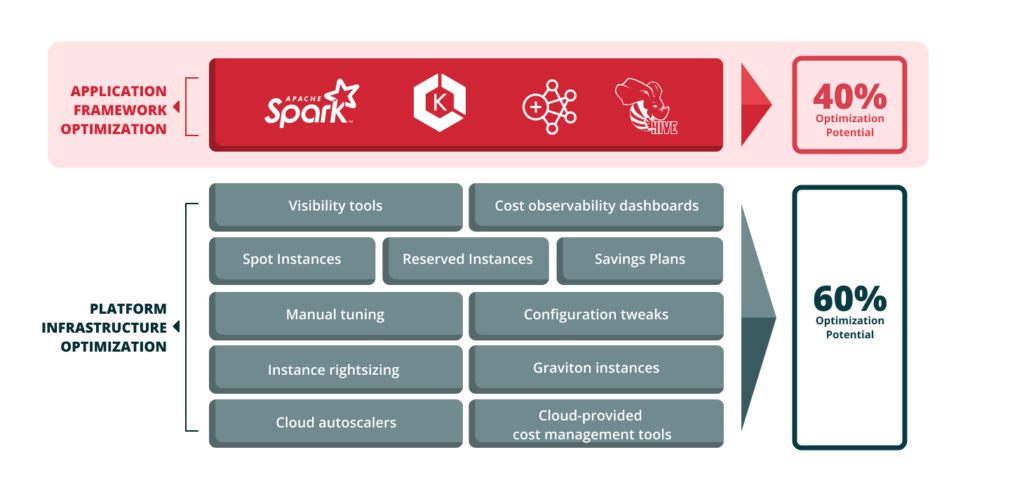

Yes. Most cloud optimization solutions work at the platform, infrastructure, or hardware level. Pepperdata optimizes at the application framework level and is therefore complementary to those solutions. Continue optimizing your cloud platform with your existing tooling and processes, such as buying Reserved Instances, Spot Instances, and Savings Plans at the platform layer. Then implement Capacity Optimizer on top to optimize your applications and achieve additional application efficiency and cloud cost reduction!

Pepperdata typically installs within an hour in most enterprise environments, via a bootstrap script or Helm chart. Within a few hours after that, you will start to see waste and cost savings on your Pepperdata-provided dashboard. You should start to see the hard cost savings on your next cloud bill.

- For batch workloads like Spark, Pepperdata goes to work immediately to reduce your costs. You should start to see savings within about 24 hours.

Applications run as usual, with no code changes or manual tuning required.

If you run only a handful of applications in the cloud, an engineer might be able to optimize those workloads by hand. At larger scale, however, it is impossible to do manually what Capacity Optimizer does. Capacity Optimizer works directly with the native YARN or Kubernetes scheduler to make hundreds or thousands of decisions in real time, around the clock. It operates in the background, autonomously and continuously, optimizing your cloud environment in a way that far exceeds what even the most diligent engineer could accomplish.

Pepperdata is built upon the same safe, secure, reliable platform that has been deployed to some of the most demanding enterprises for over a decade, including global banks and Fortune 10 companies. We would be happy to provide you a security white paper and engage with your teams on any questions they have about security and reliability.

Pepperdata’s pricing is based on your usage. We guarantee a 100% ROI in four months.

Yes! We welcome the opportunity to bring the same cost savings we see with leading enterprises into your environment. Contact us to get up and running with a Free 5-Day Cost Optimization Proof of Value (POV).