REPORT

Pepperdata Decreases Instance Hours/Cost by 38% on Amazon EMR

This report covers Pepperdata’s initial benchmarking work in 2021 based on TPC-DS, an industry-standard big data analytics Decision Support framework from the Transaction Processing Performance Council.

While this work is not an official audited benchmark as defined by TPC, it demonstrates the performance, efficiency, and cost improvements that Pepperdata Capacity Optimizer delivers on Amazon EMR beyond the benefits of the standard AWS Custom Auto Scaling Policy.

Key Findings:

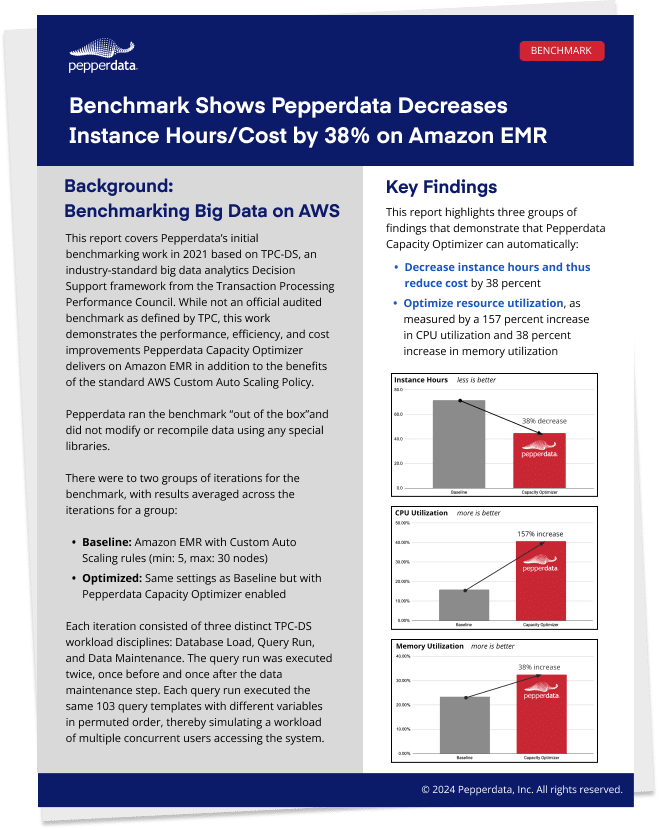

This report highlights three groups of findings, which show that Capacity Optimizer can automatically:

Decrease instance duration

Optimize resource utilization

Reduce costs by 38%

Big Data Benchmarking in the Cloud

Benchmarking is the process of running a set of standard tests against a system to assess its relative performance. It is like driving three different sports cars on the same course and measuring each car’s maximum speed, torque, and fuel consumption to compare their overall performance.

This report covers our initial work with TPC-DS, the Decision Support framework from the Transaction Processing Performance Council. TPC-DS is a sophisticated, industry-standard big data analytics benchmark developed over decades, and it is a de facto standard for SQL-based big data systems, including Hadoop. Our work is not an official audited benchmark as defined by TPC.